Healthcare is changing rapidly, with AI at the forefront of this shift. Google continues to push what is possible in this sector and has recently introduced two major additions to its healthcare AI work: MedGemma and MedSigLIP.

These models are designed to help analyze X-rays and other medical scans alongside clinical text such as patient records, which could give doctors a useful second opinion. They are part of Google's broader push to make healthcare more efficient and accurate.

Google has made headlines with healthcare AI before, most notably with Med-PaLM, but these new models go further by taking a specialized, multimodal approach. They can understand both medical images and clinical text, which is a big win for medical practitioners and researchers.

What are MedGemma and MedSigLIP?

These are not incremental updates; they are purpose-built AI models designed to handle the complexity of medical data:

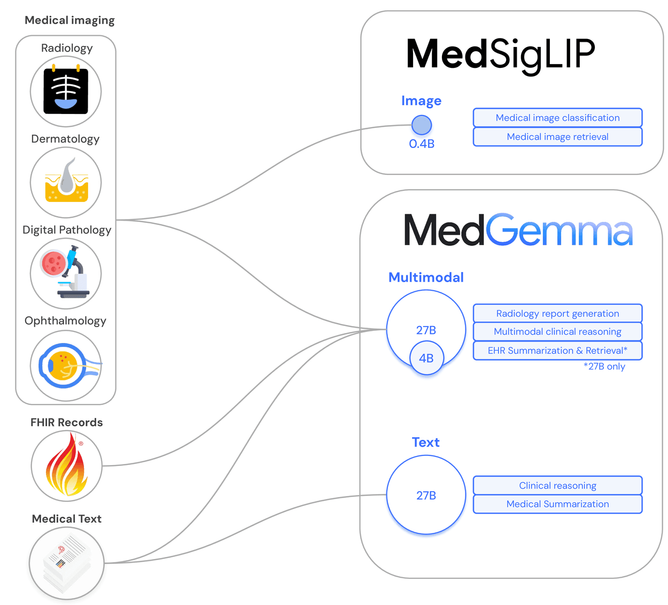

- MedGemma: This is a collection of multimodal generative models fine-tuned specifically for healthcare. Built on Google's Gemma models, MedGemma is designed to interpret medical images and text together.

Rather than evaluating a radiology report or an image in isolation, it can synthesize information from several sources, including patient histories, clinical notes, and different medical scans, to offer a more complete and contextual view.

MedGemma is aimed at text-generation tasks, such as drafting reports for medical images or answering natural-language questions about complex clinical cases.

- MedSigLIP: This is a lightweight image and text encoder tailored for healthcare. MedSigLIP encodes medical images and text into a shared embedding space, which makes it well suited to tasks that produce structured outputs rather than generated text. These include:

- Data-efficient medical image classification: Recognizing conditions in radiology, dermatology, ophthalmology, and histopathology images with relatively little labeled training data.

- Zero-shot classification: Categorizing images into classes the model was never explicitly trained on, by comparing each image's embedding with the embeddings of text descriptions of the candidate classes.

- Semantic image retrieval: Searching for and ranking medical images by how closely they match a text query.
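The zero-shot idea can be made concrete with a small sketch of classification in a shared image-text embedding space. This does not call MedSigLIP itself: the three-dimensional vectors are made-up toy values standing in for real encoder outputs, and `scale` and `bias` are hypothetical placeholders for the learned temperature and bias that SigLIP-style models use. Each candidate label is a short text prompt, and each image-label pair is scored independently with a sigmoid, in the spirit of SigLIP's pairwise sigmoid objective.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def zero_shot_scores(image_emb, label_embs, scale=10.0, bias=-5.0):
    """Score every candidate label for one image, SigLIP-style.

    Each (image, label) pair gets an independent sigmoid probability, so the
    scores do not have to sum to 1. `scale` and `bias` are hypothetical
    placeholders; in a real SigLIP-style model they are learned parameters.
    """
    img = l2_normalize(image_emb)
    scores = {}
    for label, text_emb in label_embs.items():
        txt = l2_normalize(text_emb)
        cosine = sum(a * b for a, b in zip(img, txt))
        logit = scale * cosine + bias
        scores[label] = 1.0 / (1.0 + math.exp(-logit))
    return scores


# Toy, made-up embeddings standing in for real image/text encoder outputs.
image_embedding = [0.9, 0.1, 0.2]
label_embeddings = {
    "a chest X-ray showing pneumonia": [1.0, 0.0, 0.1],
    "a normal chest X-ray": [0.0, 1.0, 0.0],
    "a chest X-ray showing pleural effusion": [0.1, 0.2, 1.0],
}

scores = zero_shot_scores(image_embedding, label_embeddings)
best_label = max(scores, key=scores.get)
for label, prob in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{prob:.3f}  {label}")
print("Predicted:", best_label)
```

Because the classes are just text prompts, a new category can be tried by writing a new prompt rather than collecting labeled images and retraining, which is what makes the approach zero-shot.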

The Power of Multimodality and Specialized Training

The real strengths of MedGemma and MedSigLIP are their multimodal capabilities and their extensive training on de-identified medical datasets. Unlike general-purpose AI models, they have learned the subtleties of medical language, medical imagery, and clinical reasoning, which makes them better suited to real-world healthcare use.

Consider an AI system that can:

- Examine a chest X-ray, compare it with the patient's electronic health record (EHR), and spot subtle patterns that could indicate early-stage disease, while taking the patient's full medical history into account.

- Draft accurate, thorough radiology reports, freeing radiologists' time for more in-depth analysis.

- Search large archives of medical images to find similar cases for comparison or research.

- Support diagnostic decisions and complex clinical reasoning with evidence-based insights.
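Finding similar cases comes down to nearest-neighbor search in an embedding space: embed the query (a new scan or, because images and text share one space, a text description), then rank stored image embeddings by cosine similarity. The sketch below assumes the archive embeddings were computed in advance by an encoder such as MedSigLIP; the case IDs and vectors are invented toy values, and a real system handling a large archive would more likely use an approximate nearest-neighbor index than a full sort.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot_product = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot_product / (norm_a * norm_b)


def top_k_similar(query_emb, archive, k=2):
    """Return the k archive cases most similar to the query, best match first."""
    scored = [
        (case_id, cosine_similarity(query_emb, emb))
        for case_id, emb in archive.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical archive of precomputed image embeddings keyed by case ID.
archive = {
    "case-001": [0.8, 0.2, 0.1],
    "case-002": [0.1, 0.9, 0.3],
    "case-003": [0.7, 0.1, 0.4],
}

# Embedding of the new patient's scan (or of a text query) in the same space.
query_embedding = [1.0, 0.0, 0.2]

matches = top_k_similar(query_embedding, archive, k=2)
for case_id, sim in matches:
    print(f"{case_id}: similarity {sim:.3f}")
```

With these toy vectors, case-001 ranks first and case-003 second; case-002 points in a different direction and is left out of the top two.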

Impact on Healthcare: A Glimpse into the Future

MedGemma and MedSigLIP could change the healthcare landscape in several ways:

- Improved Diagnostic Precision: By interpreting multimodal data and applying clinical reasoning, these models can help detect diseases earlier and more accurately, potentially reducing diagnostic errors.

- Optimized Workflows: Automating tasks such as report drafting, data extraction from EHRs, and preliminary symptom assessment could let healthcare professionals spend more time on direct patient care.

- Accelerated Medical Research: Quickly analyzing large datasets of medical images and text could speed up drug discovery, the identification of relevant clinical trials, and our understanding of how diseases progress.

- Enhanced Accessibility: Lightweight models such as MedSigLIP can run on lower-powered devices, bringing AI-assisted diagnostics and decision support to remote or resource-limited settings and helping to close gaps in healthcare access.

- Personalized Medicine: By analyzing an individual's unique data, these models could support more personalized treatment plans and medication recommendations.

A Step Towards Responsible AI in Medicine

Google stresses that, despite their potential, these models are intended primarily as developer tools and a foundation for building reliable healthcare applications. Before they are widely used in clinical settings, thorough real-world testing and validation are essential, along with adherence to responsible AI principles such as fairness, privacy, equity, transparency, and accountability.

Conclusion

Google's MedGemma and MedSigLIP are more than two new models; they are part of a larger, more ambitious plan to use specialized AI to improve healthcare. By surfacing deeper insights from complex medical data, these multimodal models could transform diagnosis, treatment, and medical research, and ultimately contribute to better health for everyone.